

Analyzing REMIND model outputs

Felix Schreyer (felix.schreyer@pik-potsdam.de), Isabelle Weindl (weindl@pik-potsdam.de), Lavinia Baumstark (baumstark@pik-potsdam.de)

- 1. Introduction

- 2. Model output files

- 3. Loading and analyzing model output in R

- 4. Automated model validation

- 5. Interactive scenario analysis

- 6. Model-internal R-scripts for output analysis

1. Introduction
===============

After having successfully started and accomplished a simulation run, the next step is to evaluate the simulation results.

There are plenty of ways to look at and analyze REMIND results. This tutorial gives an overview of the different tools and options you can use.

For each simulation, results are written to a folder inside the output folder of the model. This folder is created automatically and named after the run title combined with the current date.
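Because every run writes to its own time-stamped folder, it can help to locate the most recent run programmatically. The following is a minimal base-R sketch, assuming that runs are direct sub-folders of `output/` in your REMIND model folder. The helper `latest_run_dir` and its optional title filter are hypothetical and not part of any REMIND library; it picks the folder with the newest modification time instead of parsing the date from the folder name, so it does not depend on the exact date format.

```r
# Hypothetical helper (not part of REMIND): return the path of the most
# recently modified run folder inside an output directory.
# Assumption: every run is a direct sub-folder of `output_dir`.
latest_run_dir <- function(output_dir = "output", title = NULL) {
  runs <- list.dirs(output_dir, full.names = TRUE, recursive = FALSE)
  # Optionally keep only folders whose name starts with the run title
  if (!is.null(title)) {
    runs <- runs[startsWith(basename(runs), title)]
  }
  if (length(runs) == 0) {
    stop("no run folders found in ", output_dir)
  }
  # Pick the folder with the newest modification time
  runs[which.max(file.info(runs)$mtime)]
}
```

For example, `latest_run_dir("output", title = "BAU")` would return the newest folder whose name starts with "BAU", assuming you call it from the REMIND model folder.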

2. Model output files
=====================

The two main output files you will typically care about are fulldata.gdx and REMIND_generic_NameofYourRun.mif in the output folder of your run. The fulldata.gdx is the actual technical output of the GAMS optimization and contains all the variables, parameters, sets etc. of the REMIND model (the differences between these GAMS objects are explained in tutorial 2). However, this gdx-file is mainly of interest once you actually work on the GAMS code and want to check specific variables and their values. If you simply want to look at the REMIND results of your run or use them for further data analysis and plotting, open REMIND_generic_NameofYourRun.mif, which is essentially a csv-file (with semicolons as separators) in a standardized format used in the Integrated Assessment Modeling community.
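Because the .mif file is a plain-text table, you can also peek into it without any PIK-specific package. Below is a minimal base-R sketch, assuming the usual layout: semicolon-separated columns `Model;Scenario;Region;Variable;Unit` followed by one column per year, with an optional trailing `;` at the end of each line and `N/A` for missing values. The helper `read_mif_base` is hypothetical, and the example file contains made-up placeholder numbers. For real work, the dedicated readers described in section 3 are the better choice.

```r
# Hypothetical helper (not part of any REMIND or PIK library): read a .mif
# file into a wide data frame with base R only.
# Assumptions: ";" as separator, a single header line, "N/A" for missing values.
read_mif_base <- function(file) {
  lines <- readLines(file, warn = FALSE)
  # Remove the trailing ";" that often ends each line, so no empty column appears
  lines <- sub(";\\s*$", "", lines)
  read.table(text = lines, sep = ";", header = TRUE,
             check.names = FALSE,      # keep year columns named "2005", "2010", ...
             stringsAsFactors = FALSE,
             quote = "", comment.char = "",
             na.strings = c("N/A", "NA"))
}

# Usage: write a tiny made-up file (placeholder numbers, not REMIND results)
example_file <- tempfile(fileext = ".mif")
writeLines(c(
  "Model;Scenario;Region;Variable;Unit;2005;2010;",
  "REMIND;BAU;EUR;FE|Transport;EJ/yr;10;11;",
  "REMIND;BAU;SSA;FE|Transport;EJ/yr;1;N/A;"
), example_file)

mif <- read_mif_base(example_file)
str(mif)
```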

In REMIND_generic_NameofYourRun.mif, the column scenario gives the name of the run (which you specified in the first column of your config file when starting the run). The column region provides a three-letter acronym of the region (e.g. EUR -> EU, SSA -> Sub-Saharan Africa). The column variable gives the variable you are looking at. To avoid confusion with the above: it does not necessarily correspond to a variable in the GAMS code of REMIND, because the mif-file is a synthesized output generated from fulldata.gdx by post-processing R scripts from the remind library. Scrolling through the variable column gives you an impression of the large number of outputs the REMIND model lets you explore.
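To get a first impression of which scenarios, regions and variables a .mif file contains, and to pick out a single time series, a few base-R operations on the wide table are enough. The sketch below builds a small table with the same column layout instead of reading a real file; the numbers are made-up placeholders, and the helper `get_series` is hypothetical.

```r
# Small made-up table in the wide .mif layout (placeholder numbers only)
mif <- data.frame(
  Model    = "REMIND",
  Scenario = "BAU",
  Region   = c("EUR", "EUR", "SSA"),
  Variable = c("FE|Transport", "SE|Electricity", "FE|Transport"),
  Unit     = "EJ/yr",
  `2005`   = c(10, 20, 1),
  `2010`   = c(11, 22, 2),
  check.names = FALSE,
  stringsAsFactors = FALSE
)

# Which scenarios, regions and variables are in the table?
unique(mif$Scenario)
unique(mif$Region)
sort(unique(mif$Variable))

# Hypothetical helper: return one time series as a named numeric vector
get_series <- function(mif, scenario, region, variable) {
  row <- mif[mif$Scenario == scenario & mif$Region == region &
               mif$Variable == variable, , drop = FALSE]
  stopifnot(nrow(row) == 1)
  # Year columns are the ones named with four digits
  year_cols <- grep("^[0-9]{4}$", names(row), value = TRUE)
  unlist(row[1, year_cols, drop = FALSE])
}

get_series(mif, "BAU", "EUR", "FE|Transport")
```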

3. Loading and analyzing model output in R
==========================================

3.1 Access Cluster

To analyze your model results, you can load the mif-file into a local RStudio session. To get the file from the cluster, you can download it, for example, via WinSCP. More details on how to access the cluster are available here:

https://redmine.pik-potsdam.de/projects/mo/wiki/Getting_ready_to_use_the_Cluster

3.2 Load mif file as Magpie Object

You can load the mif-file of your run into a magpie object using the function read.report from the R library magclass:

```r
library(magclass)
out <- read.report("Path/to/your/mif-file", as.list = FALSE)
```

This loads the content of the mif-file into a magpie object, an array class that we use specifically to handle inputs and outputs of REMIND and MagPIE. With

```r
str(out)
```

you can inspect the dimensions of the magpie object. Since magpie objects are basically arrays, you can look at specific entries, for example like this:

```r
out["EUR", c("y2020", "y2050"), "FE|Transport (EJ/yr)"]
```

Instead of these magpie objects from the R library magclass, you can also use data frames from the R library quitte. Arrays are generally better suited for calculations, while data frames are better suited for plotting. After a while you will find out which approach works best for you; for output analysis, which involves a lot of plotting, the quitte data frames are probably the better choice. To read the .mif file as a data frame, load the library quitte and use its function read.quitte (see section 3.3).
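The trade-off between arrays and data frames mentioned above can be illustrated with base R alone. A long data frame (one row per observation, similar to the layout quitte uses) is convenient for filtering and plotting, whereas an array indexed by region, period and variable turns arithmetic across dimensions into a one-liner. The sketch below uses made-up numbers and base-R `tapply`; it only mimics the idea of a magpie object and is not how magclass is implemented.

```r
# Long format, similar in spirit to a quitte data frame (made-up numbers)
long <- data.frame(
  region   = rep(c("EUR", "LAM"), each = 4),
  period   = rep(c(2020, 2050), times = 4),
  variable = rep(rep(c("FE|Transport", "FE"), each = 2), times = 2),
  value    = c(10, 8, 40, 35, 5, 7, 20, 30),
  stringsAsFactors = FALSE
)

# Array with dimensions region x period x variable, similar in spirit to a
# magpie object (region x year x data dimension)
arr <- tapply(long$value, list(long$region, long$period, long$variable), sum)

# Calculation on the array: transport share of total final energy,
# computed for all regions and periods at once
share <- arr[, , "FE|Transport"] / arr[, , "FE"]
round(share, 3)
```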

3.3 Load mif file as quitte Object

```r
library(quitte)
mifdata <- read.quitte("PathtoYourMifFile.mif")
```

The data is now stored in the quitte object mifdata. In RStudio, selecting it and hitting F2 will show you its content. Usually, you will want to filter for the regions, variables and periods you are interested in, for example like this:

```r
library(dplyr)  # filter() comes from dplyr

df <- filter(mifdata,
             region %in% c("EUR", "LAM"),
             variable %in% c("SE|Electricity"),
             scenario %in% c("BAU"),
             period %in% c(2005, 2030, 2050))
```

3.4 Load gdx file as magpie object

Finally, you can also load the content of the fulldata.gdx into a magpie object with the function readGDX of the gdx library (https://github.com/pik-piam/gdx) to directly access the GAMS output. Here is an example of how readGDX is used:

```r
library(gdx)

gdx <- "path/to/your/run/fulldata.gdx"  # placeholder: path to the gdx file of your run

pe2se <- readGDX(gdx, "pe2se")
Mport <- readGDX(gdx, c("vm_Mport"), field = "l", format = "first_found")
demPE <- readGDX(gdx, name = c("vm_demPe", "v_pedem"), field = "l",
                 restore_zeros = FALSE, format = "first_found")
```

Here gdx is the path to the gdx file, while the second argument is the name of the GAMS object you want to load. With readGDX you can extract various GAMS objects such as sets, equations, parameters, variables and aliases. With the argument field = "l", you select the levels of endogenous variables; with field = "m", you extract their marginal values. If you pass several names, as in the demPE example, format = "first_found" returns the first of these objects that exists in the gdx file.

In the following, we present several other tools and scripts that were developed to facilitate the output analysis:

4. Automated model validation
=============================

4.1. Generation of summary and validation pdfs

The automated model analysis (summary and validation) is an important example of output analysis based on model-internal scripts (see section 6). If these scripts are executed (either by selection via cfg$output as explained in 6.1. or via the command window as explained in 6.2.), standard evaluation pdfs are created. They compare numerous model outputs against historical data, either visually or via statistical tests.

4.2 A Summary of Results

To get a first overview without getting lost in the large number of variables, look at REMIND_summary_[title].pdf. This pdf gives an overview of the model results and guides you through the storyline.

4.3 The Whole Range of Validation

A standard validation pdf REMIND_validation_[title].pdf consists of hundreds of evaluation outputs. Evaluating the model outputs on such a broad level, rather than focusing only on key outputs, gives a more complete picture of the corresponding simulation. The table of contents of the validation pdf gives a good overview of the breadth of model outputs produced by a REMIND standard simulation, even though the validation pdf only shows a subset of all possible model outputs.

5. Interactive scenario analysis
================================

The automated model validation is a good tool for visually evaluating a broad range of model outputs. However, comparison between model runs, i.e. between different scenarios, is rather difficult and inconvenient if the model results are scattered across different large PDF files.

5.1. AppResults

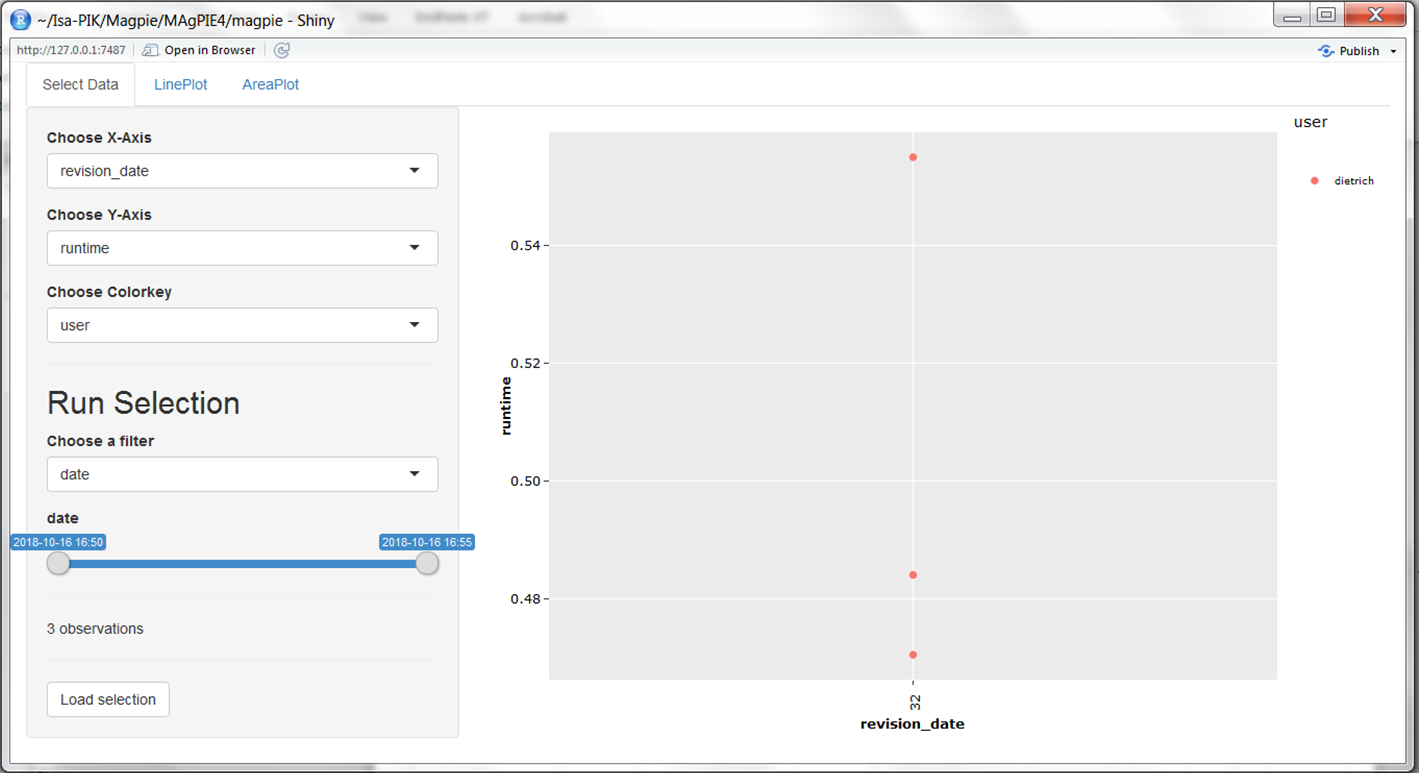

To overcome this issue, we developed the interactive scenario analysis and evaluation tools appResults and appResultsLocal as part of the library shinyresults (https://github.com/pik-piam/shinyresults), which show evaluation plots for multiple scenarios including historical data and other projections based on an interactive selection of regions and variables. You can use this tool by running the following R command, which will automatically collect all runs and visualize them:

```r
shinyresults::appResults()
```

This command opens an interactive window in which you can select the simulations you want to evaluate.

Interactive Results app

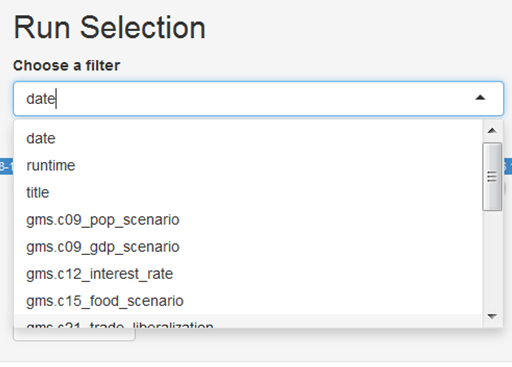

You can use filters to select a subset of all runs stored in the output folder of the model, for example by searching for runs that finished on a certain day or by searching for keywords in the titles of the simulation runs:

Run selection by using a filter

If you run the following command in the output folder on your local computer, you get an interactive window containing the results of this output folder:

```r
shinyresults::appResultsLocal()
```

Another tool for analyzing model output on your local computer is the scenario tool from the mip library. You can open it from the R console with:

```r
mip::scenTool()
```

6. Model-internal R-scripts for output analysis
===============================================

6.1. Execution of model-internal output scripts via the REMIND configuration file

In the file config/default.cfg, you can specify which R-scripts are executed for output analysis after a model run has finished. Scripts evaluating single runs are stored in the folder scripts/output/single. In the default REMIND configuration, the scripts reporting, validation (see section 4), validationSummary (see section 4), rds_report (to be used in appResults; see section 5), and dashboard are selected via cfg$output:

```r
cfg$output <- c("reporting", "validation", "rds_report", "validationSummary", "dashboard")
```

6.2. Execution of model-internal output scripts in the command window

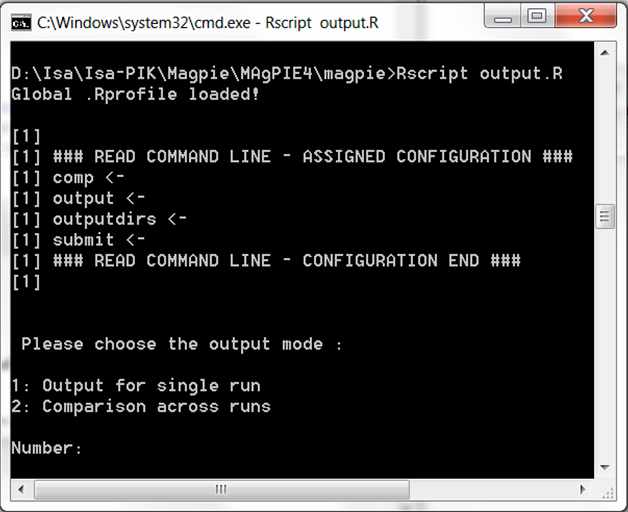

Output scripts located in the folders scripts/output/single and scripts/output/comparison can also be executed via the command window. To do so, Windows users can open a command prompt in the REMIND model folder by holding Shift, right-clicking inside the folder and selecting the "Open command window here" option.

In the command prompt, use the following command:

```sh
Rscript output.R
```

You are now asked to choose the output mode:

```
1: Output for single run
2: Comparison across runs
```

Executing output scripts via command window

In both cases, you can then choose from the list of available model simulations the runs for which you want to conduct the output analysis. In the next step, you can interactively select which model-internal output scripts you want to execute.

The selected scripts are then executed. After completion, the results are written to the respective folder of the simulation run (named after the run title combined with the current date, inside the output folder of the model).

7. Analysis of outputs with the remind library
==============================================

If you want to go beyond visual output analysis and the predefined output evaluation provided by the scripts in the model folders scripts/output/single and scripts/output/comparison, you can use the functionality of the R package remind. This library contains a collection of common functions for extracting outputs from the REMIND model, which are also the basis for generating the automated validation pdf. For a quick overview of the functions included in the library, you can scan the folder remind/R.

To familiarize yourself with this library, you can open an R/RStudio session and set the REMIND model folder as working directory with the following command:

```r
setwd("/path/to/your/remind/model/folder")
```

Then load the library and open its help pages:

```r
library(remind)
?remind
```

You can click on the index and search for interesting functions. All functions used to generate the reporting have names starting with "reporting" (they are defined in the files reporting*.R).
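Instead of browsing the help index, you can also list the reporting functions programmatically. Below is a small base-R sketch built on `getNamespaceExports()`, which loads a package namespace if necessary and returns the names of its exported objects. The helper `list_exported` is hypothetical, and the call for remind is shown as a comment because it requires the package to be installed and its result depends on the installed version.

```r
# Hypothetical helper: list the exported objects of an installed package
# whose names start with a given prefix.
list_exported <- function(pkg, prefix = "") {
  exports <- getNamespaceExports(pkg)  # loads the namespace if necessary
  sort(exports[startsWith(exports, prefix)])
}

# Usage (requires the remind package to be installed):
# list_exported("remind", prefix = "reporting")

# The same call works for any installed package, e.g. base R's stats package:
list_exported("stats", prefix = "t.test")
```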